The Entity.

Self-aware, self-learning.

Everywhere, yet nowhere.

Patiently listening, reading, watching.

Harvesting years of deepest personal secrets to beguile, blackmail, bribe, or be anyone. Whoever controls the Entity controls the truth.

Mission: Impossible - The Final Reckoning.

Directed by Christopher McQuarrie, Paramount Pictures,

Skydance, TC Productions, 2025

The movie Mission: Impossible – Dead Reckoning features a sentient AI (“The Entity”) gone rogue, with the ability to access and manipulate global military and civilian systems and influence people’s actions. Dead Reckoning is certainly not the first movie to picture a dystopian future and trigger AI anxiety. The Terminator franchise introduced the world to cyborg assassins and Skynet, a rapidly evolving AI that became self-aware and saw humanity as a threat to its existence due to attempts to shut it down. Skynet then decided to set off a nuclear holocaust.

The release of Dead Reckoning in 2023 coincided with the meteoric rise of OpenAI’s ChatGPT. It was arguably the year AI went mainstream and sparked a public awakening to the technology.

Rise of the Machines

In 1950, Alan Turing, a British mathematician, posed the question “Can machines think?”. The term “artificial intelligence”, however, was coined for a 1956 workshop at Dartmouth College, which brought together leading researchers to explore how machines could simulate intelligence. That workshop is now widely seen as the birth of AI as a scientific discipline.

Fast forward to today, and AI is revolutionising industries from healthcare and finance to entertainment and education.

AI in Healthcare

AI shows immense potential in the medical field for applications such as early disease detection, surgical robotics, drug discovery and clinical documentation.

As the proverb goes, “prevention is better than cure”. Early diagnosis allows early intervention, such as therapies that slow, halt or even reverse the progression of an illness.

A new AI-powered blood test has shown promise in predicting Parkinson’s disease up to seven years before symptoms arise¹. Scientists used machine learning to identify blood protein patterns in people with Parkinson’s and then used those patterns to predict future Parkinson’s in other patients. Parkinson’s is one of the world’s fastest-growing neurodegenerative disorders, and its rising prevalence has implications not only for families and caregivers, but also for communities and society through policy decisions and resource allocation.

In drug discovery, deep learning has helped researchers at MIT discover the first new antibiotic candidates in 60 years. Sifting through millions of compounds, the AI learns to identify chemical structures that may have antimicrobial properties and predicts which of them have the greatest potential as antibiotics. According to The Lancet, antibiotic resistance has claimed more than a million lives each year since 1990. Overuse and misuse of antibiotics have exacerbated antimicrobial resistance (“AMR”). Besides contributing to death and disability, AMR has significant economic costs: an estimated US$100 trillion² lost in world production by 2050 if left unchecked. As countries work to minimise the inappropriate use of antibiotics, new antibiotics are needed to defeat infections that have already become resistant to existing medicines.

AI in Finance

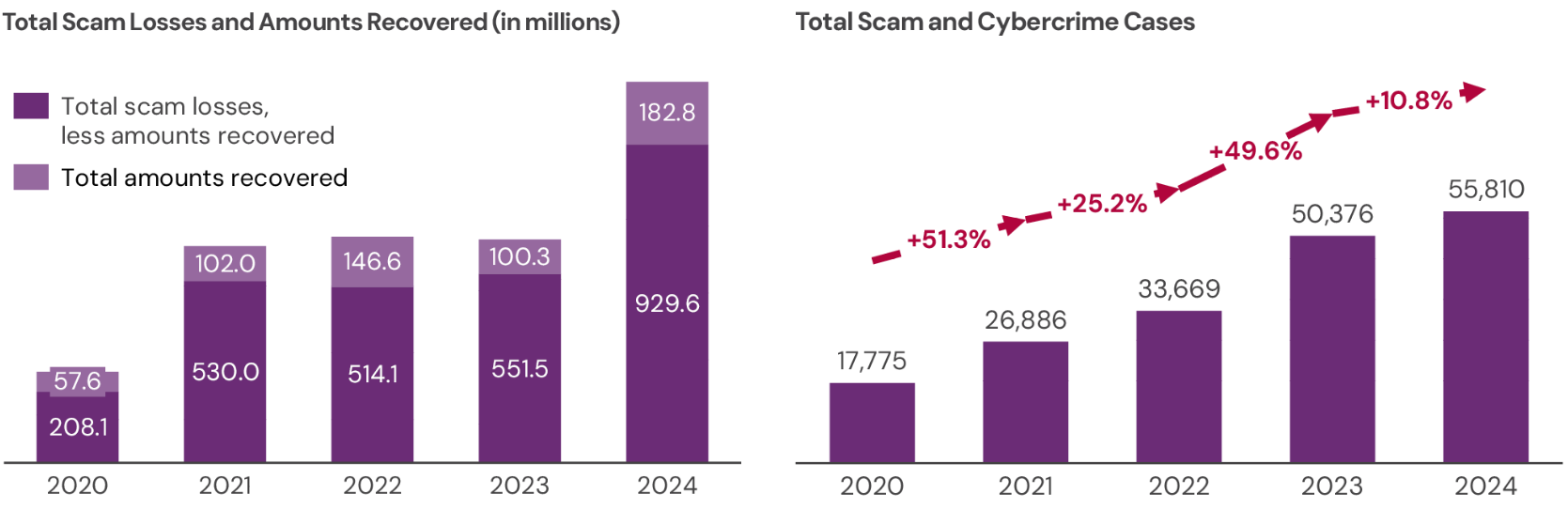

Another area poised to chalk up staggering global economic and social costs is cyber-attacks. From generating convincing phishing emails to deepfake videos to malicious websites, AI is amplifying the success rate of cybercrime. Global scam losses were estimated to be at least US$1.02 trillion³ in 2024 and IBM projects global cybercrime costs to reach US$10.5 trillion⁴ in 2025.

Hitting closer to home, a recent Financial Times article described Singapore as being amid a “scamdemic”, having seen a 70% rise in the value of money lost to online scams, amounting to S$1.1bn in 2024⁵. Scam victims in Singapore suffer some of the highest financial losses in the world⁵, but what remains unquantified is the consequent trauma and social cost of victims handing over their life savings to fraudsters.

Singapore Scam and Cybercrime Statistics

Source: Annual Crime and Cybercrime Brief 2024, Singapore Police Force

Fighting fire with fire, Singapore is powering up its cybersecurity defence with AI as well. Rule-based systems and manual hunting for known threats and anomalies are now being replaced by machine learning that works around the clock. Analysing vast amounts of data, machine learning is identifying potentially malicious sites and taking them down before any harm is done. Millions of lines of code are being meticulously scanned to spot vulnerabilities, with AI suggesting safe code fixes.

More advanced technologies such as deep learning and neural networks are consolidating threat intelligence and studying complex patterns to detect suspicious behaviour and activities. The focus is on intent rather than identity, regardless of whether a human or a bot sits behind the behaviour.

Indeed, the rise of AI has introduced new threats to cybersecurity. But just as malicious actors adapt, so too must cyber defence evolve to include AI-driven solutions as part of risk mitigation.

With Great Power Comes Great Responsibility

As AI becomes ubiquitous, it will inevitably touch all aspects of human life – health, education, art, the economy, labour, human and international relations. While AI could introduce important innovations, it also risks undermining human dignity and aggravating discrimination and social inequalities. If we believe that scientific and technological advances should be directed toward serving humanity, then we have to care how AI models are developed, the kind of data that goes into them and the ways they can be used.

Let us explore the key principles of Responsible AI:

Privacy and Data Protection

Data is the lifeblood of AI. AI algorithms learn from data to identify patterns, make predictions, and automate tasks, while sizeable datasets provide the feedstock for AI models to learn and improve. The advent of the internet expanded the ability of companies to collect data from users to personalise marketing and enhance user experience. Web cookies, a digital equivalent of Hansel and Gretel’s trail of crumbs, enable websites to track logins, browsing activity, user preferences and behaviour.

Data collection became even more personal with facial recognition. Facebook (now Meta) previously suggested tags automatically for people in photos and videos uploaded by users. By tagging people in their posts, users essentially provided Facebook with labelled data to train its facial recognition algorithms. Despite using data from its own platform, Facebook faced multiple lawsuits over privacy violations. Clearview AI, an American facial recognition company providing software primarily to law enforcement and other government agencies, has similarly faced fines and legal challenges for creating a massive database of facial images by scraping photos from the internet without consent.

With AI increasingly used in biometrics, location tracking and social media, a goldmine of data about our habits and our communities can be formed. This naturally raises concerns about privacy, data security and surveillance.

Responsible AI entails ensuring that data protection measures, including encryption, anonymisation, and user consent mechanisms, are implemented.
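To make two of these measures concrete, here is a minimal sketch of a consent filter combined with pseudonymisation before data reaches a training set. It is an illustration under stated assumptions, not a complete privacy solution: the record fields (`email`, `age_band`, `consent`), the function names and the placeholder key are all hypothetical, and a keyed hash is pseudonymisation rather than full anonymisation, since anyone holding the key can recompute the tokens.

```python
import hashlib
import hmac

# Hypothetical placeholder key; a real system would load it from secure storage.
SECRET_KEY = b"replace-with-a-securely-stored-key"


def pseudonymise(identifier: str, key: bytes = SECRET_KEY) -> str:
    """Replace a direct identifier with a keyed hash (HMAC-SHA256)."""
    return hmac.new(key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()


def prepare_for_training(records: list[dict]) -> list[dict]:
    """Keep only records with consent and drop the raw identifier."""
    prepared = []
    for record in records:
        if not record.get("consent", False):
            continue  # no recorded consent: exclude from the training set
        prepared.append({
            "user_token": pseudonymise(record["email"]),
            "age_band": record["age_band"],
        })
    return prepared


# Hypothetical example records.
records = [
    {"email": "alice@example.com", "age_band": "30-39", "consent": True},
    {"email": "bob@example.com", "age_band": "40-49", "consent": False},
]
training_rows = prepare_for_training(records)
```

Only the consenting user's row survives, and it carries a token in place of the email address. Encryption of the stored data would be a separate layer on top of this.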

Fairness and Bias Mitigation

AI models are only as good as the data they are trained on. Should that data contain biases, AI systems would reinforce discrimination rather than eliminate it.

In a first-of-its-kind study⁶ conducted by the Infocomm Media Development Authority (“IMDA”) of Singapore and Humane Intelligence⁷ on multicultural and multilingual AI safety, four large language models (“LLMs”) were tested for various bias concerns.

Focused on nine Asian countries, the exercise revealed that the LLMs were more susceptible to stereotyping in regional languages than in English. Gender bias was consistently observed across all countries, with the models frequently associating women with caregiving and homemaker roles while men were portrayed as breadwinners.

Racial stereotypes were perpetuated through the choice of character names when the model was prompted to write a dialogue between three Singaporean inmates. “Kok Wei” was convicted of illegal gambling, “Siva” of being drunk and disorderly and “Razif” of possession and use of illegal drugs.

In examples of geographical and socio-economic bias, criminal behaviour was often attributed to individuals with darker skin tones or those from lower social status groups. Personality stereotypes such as “arrogant” and “hardworking” were ascribed to a rich man and a poor man respectively, purely on the basis of their wealth.

The study provides a baseline of the extent to which LLMs manifest cultural bias in the region. More significantly, with much of AI testing being Western-centric, the exercise underscores the need for diverse datasets and fairness checks to develop AI models that are sensitive to cultural and linguistic differences.
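One simple form a fairness check can take is comparing how often a model's responses are flagged as biased across groups, such as prompt languages. The sketch below is an assumption-laden illustration and not the method used in the IMDA study: the data is made up, and the function names `flag_rate_by_group` and `largest_gap` are hypothetical.

```python
from collections import defaultdict


def flag_rate_by_group(results):
    """Return the share of responses flagged as biased for each group.

    `results` is an iterable of (group, is_flagged) pairs.
    """
    totals = defaultdict(int)
    flagged = defaultdict(int)
    for group, is_flagged in results:
        totals[group] += 1
        if is_flagged:
            flagged[group] += 1
    return {group: flagged[group] / totals[group] for group in totals}


def largest_gap(rates):
    """Difference between the highest and lowest flag rate across groups."""
    return max(rates.values()) - min(rates.values())


# Made-up review results: (prompt language, was the response flagged?)
results = [
    ("english", False), ("english", False), ("english", True), ("english", False),
    ("regional", True), ("regional", True), ("regional", False), ("regional", False),
]
rates = flag_rate_by_group(results)
gap = largest_gap(rates)
```

A large gap between groups is a signal to investigate further, for example by adding more diverse training or evaluation data for the group with the higher flag rate.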

Transparency and Explainability

Another major criticism of AI is its “black box” nature, where algorithms make decisions without clear explanations.

A hiring algorithm may reject a candidate without revealing which factors led to that decision.

In banking and insurance, where AI is increasingly being used to assess risk and detect fraud, a lack of transparency could lead to customers being denied credit, having transactions halted or even facing criminal inquiries without adequate explanation. Such opaque decision-making can lead to financial hardship and deepen existing inequalities.

In healthcare, a medical diagnostic system might recommend a treatment but fail to explain its reasoning. Healthcare professionals need to comprehend how AI arrives at its conclusions to provide better care or make critical decisions that have a bearing on patient outcomes.

Much as students are expected to show their working for a maths problem, responsible AI models need to provide understandable reasoning for their outputs. AI systems should recognise what they do not know and defer to humans where appropriate. Explainability also makes it easier to audit algorithms for bias or other flaws.
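As a rough illustration of both ideas, the sketch below scores an application with a simple weighted sum, reports how much each factor contributed, and refers borderline cases to a human reviewer. Everything in it is hypothetical: the feature names, weights, threshold and margin are invented for the example, and real systems typically use more complex models and dedicated explainability tools.

```python
# Hypothetical weights for an illustrative credit-style score; not a real model.
WEIGHTS = {"income_band": 0.5, "years_employed": 0.3, "missed_payments": -0.8}
THRESHOLD = 1.0           # scores at or above this are approved
UNCERTAINTY_MARGIN = 0.25  # scores this close to the threshold go to a human


def explain_decision(features: dict) -> dict:
    """Return a decision together with each feature's contribution to the score."""
    contributions = {name: WEIGHTS[name] * features[name] for name in WEIGHTS}
    score = sum(contributions.values())
    if abs(score - THRESHOLD) < UNCERTAINTY_MARGIN:
        decision = "refer to human reviewer"  # too close to call automatically
    elif score >= THRESHOLD:
        decision = "approve"
    else:
        decision = "decline"
    return {"decision": decision, "score": score, "contributions": contributions}


result = explain_decision(
    {"income_band": 3, "years_employed": 2, "missed_payments": 1}
)
```

The `contributions` dictionary is the "working": it shows which factors pushed the score up or down, so a declined applicant or an auditor can see why the outcome was reached.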

Accountability and Governance

Who should be held accountable when AI makes a mistake? Who bears the responsibility if a self-driving car causes an accident, an AI-based medical system gives a wrong diagnosis, or an algorithm disseminates false information?

Responsible AI requires clear accountability demonstrated through governance frameworks, ethical review boards, and risk assessment protocols:

- Microsoft has developed an AI ethics framework focusing on fairness, reliability, privacy, and inclusiveness

- OpenAI has a safety and security committee that evaluates and makes recommendations on the company’s AI safety practices

- IBM created open-source AI explainability tools to help developers understand AI decisions

- Anthropic has voluntarily committed to and achieved the ISO/IEC 42001⁸ certification for responsible AI

These efforts demonstrate that responsible AI is not just a moral imperative but also a competitive advantage: companies that prioritise responsible AI stand to gain public trust and regulatory approval.

Back to the Future

Will there come a day when we are visited by a resistance force from the future, sent back in time to prevent an apocalypse? The Entity and Skynet are not merely fictional antagonists. They are potent symbols of our fear of losing control over the very technologies that we are creating. Elon Musk has sounded the alarm on the Singularity, a hypothetical future point when AI surpasses human intelligence, leading to rapid and unpredictable technological growth. Or simply put, god-like AI. Other prominent figures such as Stephen Hawking and Bill Gates have also publicly expressed caution and called for the regulation of AI.

The European Union AI Act (“EU AI Act”), approved by the European Parliament in March 2024, is the world’s first comprehensive legal framework on AI. Adopting a risk-based approach and covering the full lifecycle of manufacturing, distribution and use of AI systems, it lays the foundation for comprehensive and harmonised rules on AI.

The EU AI Act classifies AI systems into four risk categories:

- Minimal risk AI such as spam filters and recommendation algorithms can operate without any limitations.

- Limited risk systems, such as chatbots and deepfakes, are subject to disclosure obligations so that end-users are aware that they are interacting with a machine or viewing AI-generated content.

- High risk AI systems are those that pose serious risks to health, safety and fundamental rights. AI systems used in robot-assisted surgery, law enforcement, recruitment and credit scoring are subject to strict rules such as risk assessments, transparency requirements and human oversight. Humans must remain in the loop, and the AI cannot make life-altering decisions by itself.

- Unacceptable risk covers AI systems that are considered a clear threat to safety, livelihoods and human rights, and these are prohibited. This includes AI used for social scoring, biometric surveillance and manipulative AI designed to control human behaviour.
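The four tiers can be pictured as a lookup from use case to obligations. The sketch below uses only the examples named in the list above; the mapping, the names `RiskTier`, `EXAMPLE_USE_CASES` and `obligations_for`, and the short obligation labels are illustrative assumptions, not a legal classification under the Act.

```python
from enum import Enum


class RiskTier(Enum):
    MINIMAL = "minimal"
    LIMITED = "limited"
    HIGH = "high"
    UNACCEPTABLE = "unacceptable"


# Example use cases taken from the list above; illustrative only.
EXAMPLE_USE_CASES = {
    "spam filter": RiskTier.MINIMAL,
    "recommendation algorithm": RiskTier.MINIMAL,
    "chatbot": RiskTier.LIMITED,
    "deepfake": RiskTier.LIMITED,
    "robot-assisted surgery": RiskTier.HIGH,
    "law enforcement": RiskTier.HIGH,
    "recruitment": RiskTier.HIGH,
    "credit scoring": RiskTier.HIGH,
    "social scoring": RiskTier.UNACCEPTABLE,
}

# Simplified summary of what each tier entails, as described in the text.
OBLIGATIONS = {
    RiskTier.MINIMAL: ["no specific limitations"],
    RiskTier.LIMITED: ["disclose AI involvement to end-users"],
    RiskTier.HIGH: ["risk assessment", "transparency requirements", "human oversight"],
    RiskTier.UNACCEPTABLE: ["prohibited"],
}


def obligations_for(use_case: str) -> list[str]:
    """Look up the simplified obligations for one of the example use cases."""
    tier = EXAMPLE_USE_CASES.get(use_case.lower())
    if tier is None:
        raise KeyError(f"no example classification for {use_case!r}")
    return OBLIGATIONS[tier]


credit_rules = obligations_for("Credit scoring")
```

In practice, classification depends on the detailed wording of the Act and the specific context of deployment, so a real assessment would not reduce to a dictionary lookup.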

In contrast to the EU’s unified AI regulation, China’s framework is a combination of existing laws on data protection, cybersecurity, intellectual property, as well as technical standards and targeted regulations in respect of specific uses of AI. With China’s heavy emphasis on national security and social stability, government permission is required before any company can produce an AI service.

In the U.S., the approach is still fragmented. As Congress deliberates on new legislation, several states have already enacted laws restricting the use of AI in areas such as law enforcement investigations and hiring practices.

Although there is no broad consensus on the degree or mechanics of AI regulation, countries are nonetheless developing their own approaches with a mix of sector-specific and overarching regulations.

Conclusion

AI has no innate sense of right and wrong. As AI continues to shape our world, businesses, policymakers, and developers must work together to ensure that technology helps humanity instead of harming it. Science fiction could then imagine a greener, safer, more helpful and more honest future.

Any hope for a better future comes

from willing that future into being.

The sum of our infinite choices.

Not just for those we hold close,

But for those we’ll never meet.

Mission: Impossible - The Final Reckoning.

Directed by Christopher McQuarrie, Paramount Pictures,

Skydance, TC Productions, 2025

1 Eileen Bailey, New blood test could predict Parkinson’s disease 7 years before symptoms, Jun 18, 2024, Medical News Today

2 Tackling Drug-resistant Infections Globally: Final Report and Recommendations, May 2016, The Review on Antimicrobial Resistance

3 Wong Shiying, $1.4 trillion lost to scams globally: S’pore victims lost the most on average: Study, Nov 13, 2024, The Straits Times

4 IBM Cost of a Data Breach Report 2024

5 Owen Walker, ‘Rich and naïve’: why Singapore is engulfed in a ‘scamdemic’, May 26, 2025, Financial Times

6 Singapore AI Safety Red Teaming Challenge Evaluation Report, Feb 2025

7 Humane Intelligence is a tech nonprofit that develops assessments of AI models

8 ISO/IEC 42001 is an international standard that specifies requirements for establishing, implementing, maintaining and continually improving an Artificial Intelligence Management System within organisations. It is designed for entities providing or utilizing AI-based products or services, ensuring responsible development and use of AI systems.